Reinforcement Learning for bucketwheel reclaimer turn control

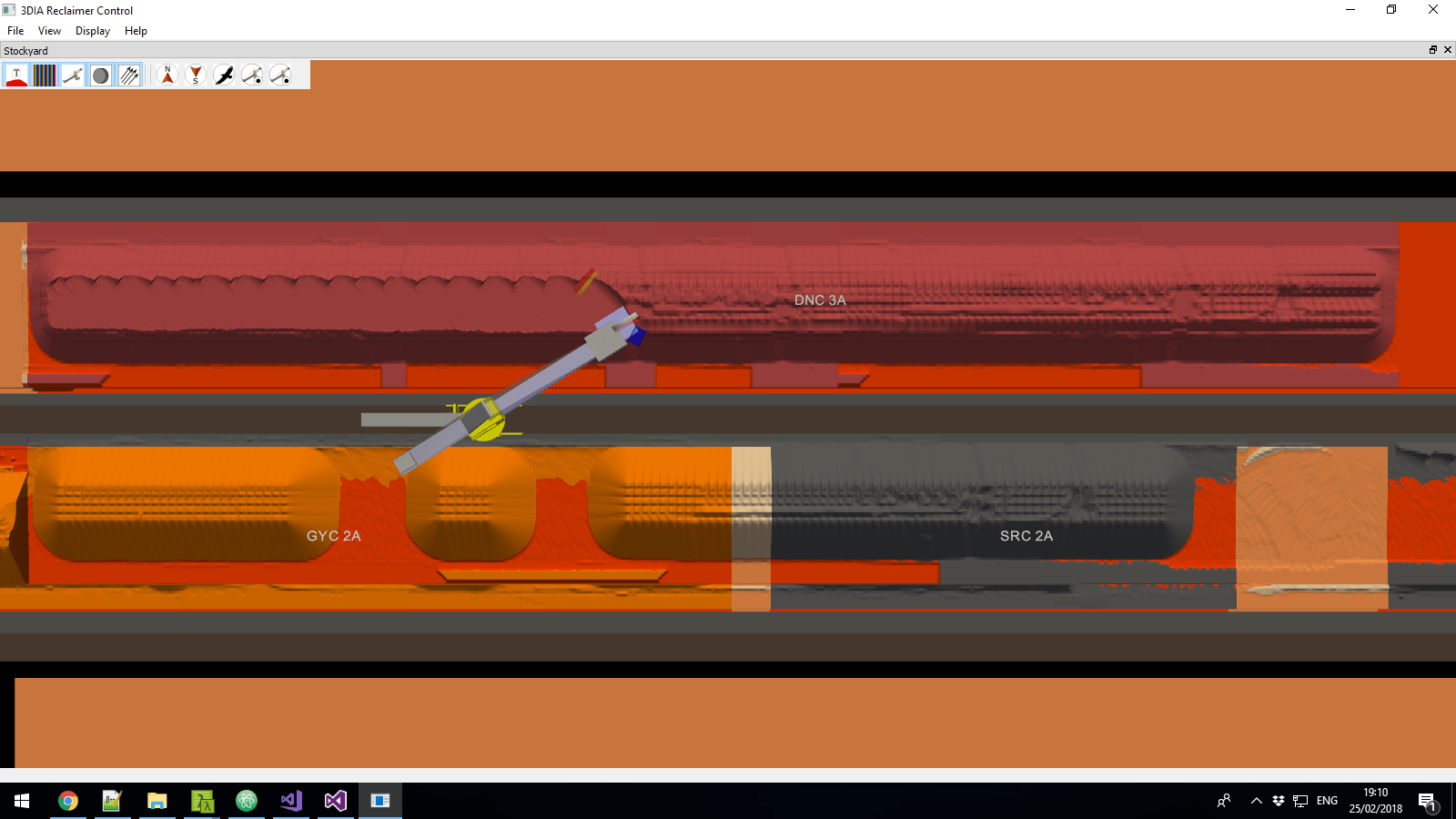

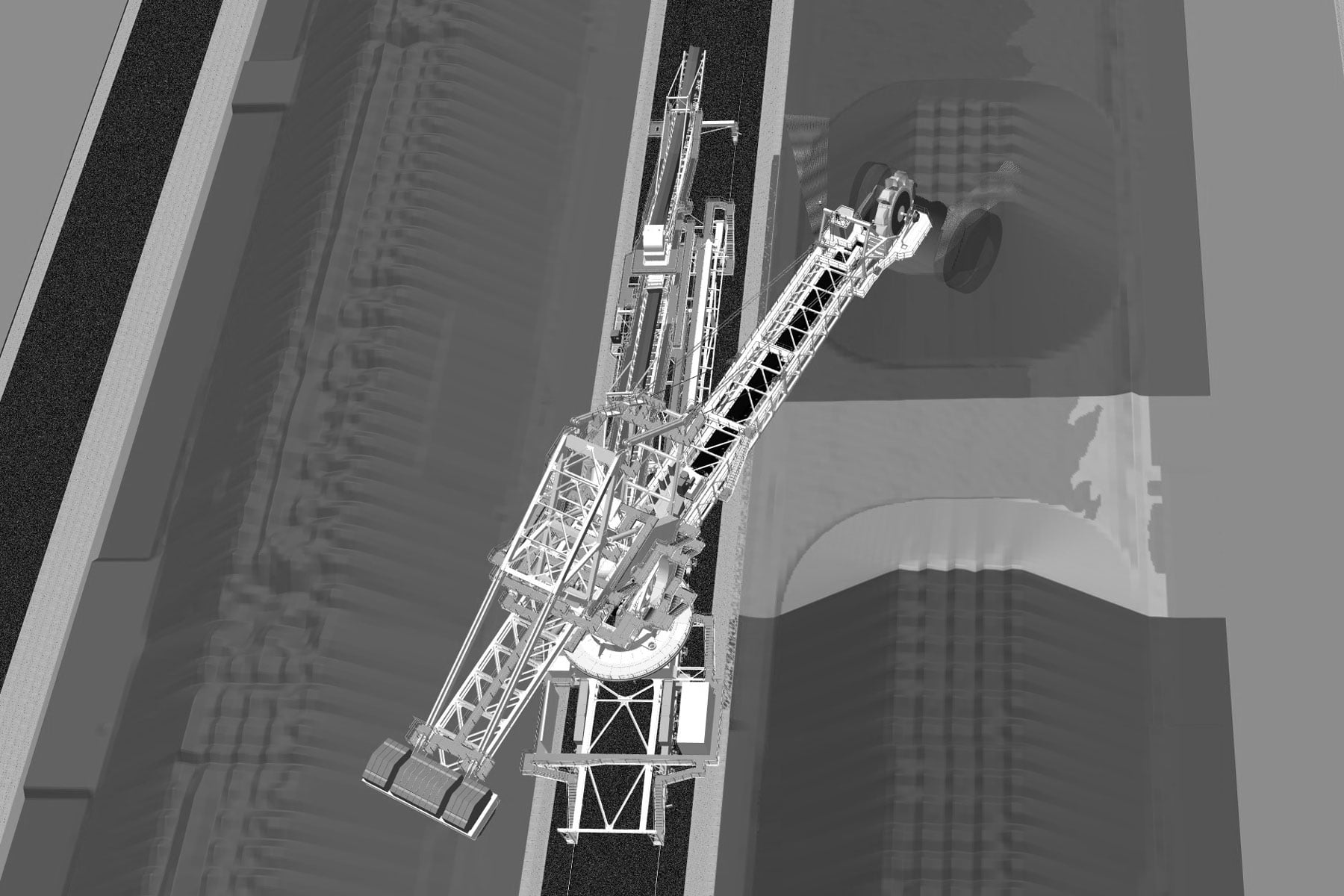

A bucketwheel reclaimer is a large machine used in bulk material handling applications such as iron ore loading. Three Springs applied reinforcement learning to control the slew and step speed of a bucketwheel reclaimer, and the resulting controller performed much better than existing machine PLC control systems. Frontier Automation’s machine-vision-based 3DReclaim system routinely delivers more than 10% improvement in throughput compared to standard machine PLC control. A machine learning approach offered room for further improvement: the dense stockyard map data gives a starting point for exploiting as-yet-untapped potential, particularly on the corners.

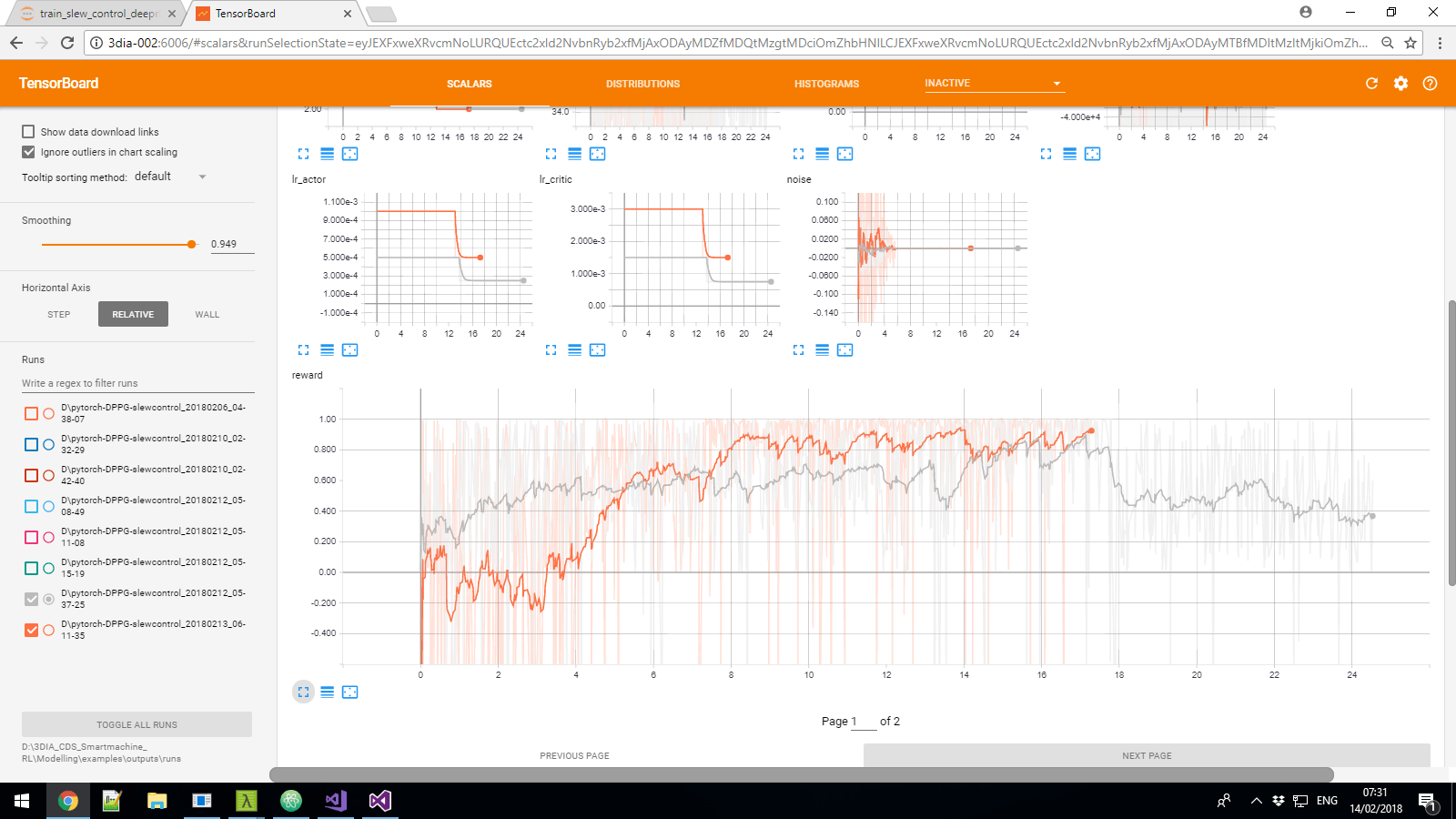

We took the 3DReclaim simulator and production control system by Frontier Automation and applied deep reinforcement learning. Because the actions are continuous and we needed to reuse previously collected data (off-policy learning), we used a DDPG (Deep Deterministic Policy Gradient) model to control the slew and step speed. The successful policy was trained on about three weeks of data.
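Data reuse in DDPG-style methods typically rests on two pieces: a replay buffer that stores past transitions for repeated sampling, and slowly updated target networks. The following is a minimal sketch of both, not the implementation used in this project. The names `ReplayBuffer` and `soft_update` and the default `tau` value are illustrative assumptions, and network parameters are represented as plain lists of floats to keep the example self-contained.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions.

    Hypothetical helper for illustration; not taken from the production system.
    """

    def __init__(self, capacity, seed=None):
        # A bounded deque silently discards the oldest transition when full.
        self._items = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self._items.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement within one batch. A stored
        # transition can still be drawn again in later batches, which is
        # what lets an off-policy method reuse data.
        return self._rng.sample(list(self._items), batch_size)

    def __len__(self):
        return len(self._items)


def soft_update(target, online, tau=0.005):
    """Polyak-average online parameters into target parameters.

    `tau` is an assumed value; small values make the target change slowly.
    """
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]
```

In a full agent, each simulator step would call `add`, and each training step would draw a batch with `sample` before updating the actor and critic.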

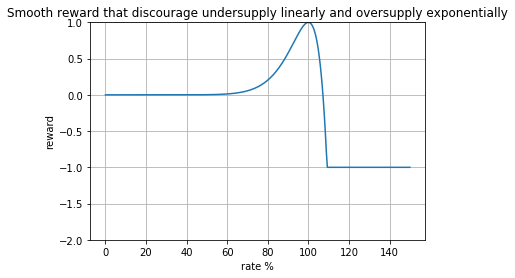

A key element was a reward curve that matches business and operational logic. This is a clear advantage of reinforcement learning over incumbent control systems, because we can design a nonlinear reward curve that reflects the business requirements. In the reward curve pictured, we wanted to discourage under-supply smoothly but discourage over-supply drastically, since over-supply can overload the machine, while placing the reward peak at 100% of our target throughput.
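One way to express such a curve is an asymmetric bell shape: wide on the under-supply side and narrow on the over-supply side. The sketch below is an assumption about the general shape only. The function name `throughput_reward`, the Gaussian form, and both width values are hypothetical and are not the reward used in production.

```python
import math


def throughput_reward(rate, target, under_width=0.5, over_width=0.05):
    """Asymmetric reward that peaks at 1.0 when rate equals target.

    Widths are fractions of the target and are illustrative assumptions:
    a large `under_width` gives a gentle slope below target, and a small
    `over_width` gives a steep drop above it.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    ratio = rate / target
    # Pick the width according to which side of the target we are on.
    width = under_width if ratio <= 1.0 else over_width
    return math.exp(-0.5 * ((ratio - 1.0) / width) ** 2)
```

With these assumed widths, running 10% under target costs very little reward, while running 10% over target removes most of it, so the agent is pushed to approach the target from below.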

Typical PLC-based PID controllers must take a conservative approach because they can’t look ahead or fit complex target curves. Our smart control system increases the reclaim rate on corners with a much lower risk of overshooting its target rate and halting downstream processing.

The state and action spaces are listed below:

Actions:

- Slew speed

- Step speed

State:

- Laser-based volume profile of the bench face ahead of the bucket wheel

- Hydraulic pressure feedback on the reclaimer wheel

- A lagging indicator of coal weight downstream
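The lists above could be represented in code roughly as follows. This is a sketch under stated assumptions: the class and field names, the flat vector layout, the `[-1, 1]` raw policy output range, and the treatment of speeds as non-negative magnitudes with hypothetical maxima are all illustrative and not taken from the 3DReclaim system.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Observation:
    """State seen by the policy (field names are hypothetical)."""
    face_profile: List[float]   # laser-based volume profile ahead of the wheel
    wheel_pressure: float       # hydraulic pressure feedback on the wheel
    downstream_weight: float    # lagging weight measurement downstream

    def to_vector(self):
        # Flatten into one list, the usual input format for a policy network.
        return [*self.face_profile, self.wheel_pressure, self.downstream_weight]


@dataclass(frozen=True)
class Action:
    """Control outputs of the policy."""
    slew_speed: float
    step_speed: float


def scale_action(raw, slew_max, step_max):
    """Map raw policy outputs in [-1, 1] to speeds in [0, max].

    Assumes speeds are non-negative magnitudes; out-of-range raw values
    are clamped before scaling.
    """
    def to_range(x, upper):
        x = max(-1.0, min(1.0, x))
        return 0.5 * (x + 1.0) * upper

    return Action(to_range(raw[0], slew_max), to_range(raw[1], step_max))
```

Clamping before scaling keeps the commanded speeds within the assumed limits even if exploration noise pushes the raw output out of range.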

Compared to the baseline, a control system that is currently in production, our DDPG model learned to maximize yield at reclaimer turns. We estimate this translates to an additional increase in throughput at slew turns.

This work is an example of how reinforcement learning can be used to achieve better business outcomes on industrial control problems. The advantages include increased performance, greater flexibility from handling non-linearities, business logic embedded as custom reward functions, and potentially less tuning when using online or active learning.

At Three Springs we look forward to building and deploying reinforcement learning control systems that capture more complex business requirements as an alternative to conventional control systems.

A view of the performance tracking and internal monitoring, which was performed in TensorBoard.